Are you ready to uncover the secrets of the least squares regression line and dive into the captivating world of statistics and data analysis? In this article, we’ll explore this fundamental method for estimating the parameters of linear models, which opens up a whole new realm of possibilities.

By the end of this article, not only will you be well-versed in this powerful tool, but you’ll also learn how to apply the least squares regression line in various fields and make predictions.

Lesson outcomes

By the end of this article, you’ll have achieved the following learning outcomes:

- Grasp the math behind the least squares regression line and how to calculate it by hand.

- Understand the wide range of practical applications across various fields.

- Become aware of the important limitations and assumptions of the method.

- Learn how to evaluate the performance of a least squares regression line model.

- Discover advanced regression techniques to enhance your models.

- Feel confident applying the least squares regression line in your work and everyday life.

So, let’s jump right in and start unravelling its mysteries!

The Math Behind the Least Squares Regression Line

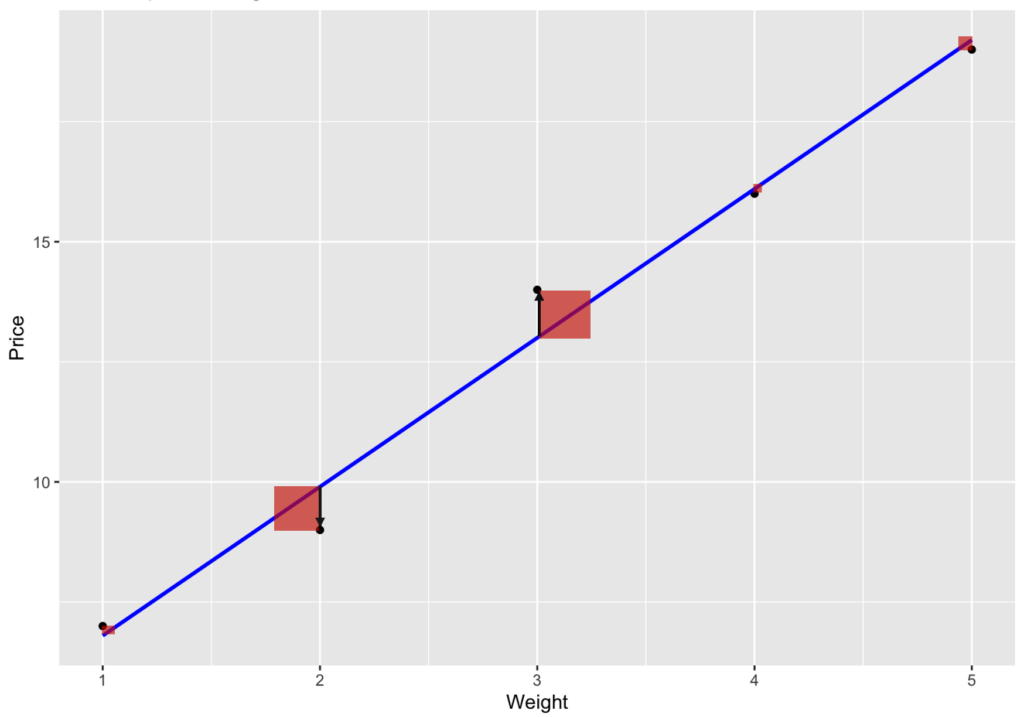

Let’s start by breaking down the mathematics behind the least squares regression line. Our goal is to find a line that minimizes the sum of the squared differences between the actual data points and the predicted values.

Sounds complicated? Don’t worry; we’ll walk you through it step by step.

Formally, we want the values of the intercept (α) and the slope (β) that minimize the following objective function: the sum of the squared differences between the observed data points (yᵢ) and their corresponding predicted values (α + βxᵢ) on the regression line.

\sum (y_i - (\alpha + \beta x_i))^2

Where:

∑ = summation over all n data points (i = 1, …, n),

(yᵢ − (α + βxᵢ))² = the squared difference between the actual value (yᵢ) and the predicted value (α + βxᵢ) for data point i.

NOTE: In the context of the least squares regression line, “minimize” means choosing α and β so that the sum of the squared differences between the actual values (yᵢ) and the predicted values (α + βxᵢ) is as small as possible. The line with those values of α and β is the best-fitting line.
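To make the objective concrete, here is a minimal Python sketch of the function being minimized. The name `rss` and the toy data (three points that lie exactly on y = 1 + 2x) are illustrative assumptions, not part of any library.

```python
def rss(alpha: float, beta: float, xs: list[float], ys: list[float]) -> float:
    """Residual sum of squares for the candidate line y = alpha + beta * x."""
    return sum((y - (alpha + beta * x)) ** 2 for x, y in zip(xs, ys))


# Hypothetical toy data that lie exactly on the line y = 1 + 2x.
xs = [0.0, 1.0, 2.0]
ys = [1.0, 3.0, 5.0]

print(rss(1.0, 2.0, xs, ys))  # 0.0: the true line leaves no residuals
print(rss(0.0, 2.0, xs, ys))  # 3.0: each of the three residuals equals 1
```

Any other choice of α and β gives a larger value here; least squares simply picks the pair with the smallest RSS.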

To solve this, we can use calculus or matrix algebra. Let’s go with calculus this time.

Step 1: Calculate the partial derivatives

The following equations represent the partial derivatives of the residual sum of squares (RSS) with respect to the parameters α (intercept) and β (slope) in the least squares linear regression model. They are used to find the optimal values of α and β that minimize the RSS, which is a measure of the model’s error.

\frac{\partial \text{RSS}}{\partial \alpha} = \sum 2(y_i - (\alpha + \beta x_i))(-1)

\frac{\partial \text{RSS}}{\partial \beta} = \sum 2(y_i - (\alpha + \beta x_i))(-x_i)

These equations are derived using the chain rule in calculus, which states that the derivative of a composite function is the product of the derivative of the outer function with respect to the inner function and the derivative of the inner function with respect to the variable of interest.
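One way to double-check a derivation like this is to compare the analytic partial derivatives with a finite-difference approximation. In the sketch below, `rss`, `rss_gradient`, and `numerical_gradient` are hypothetical helper names, and the trial point α = 0, β = 30 is arbitrary; the data are the heights and weights from the worked example later in this article. Because the RSS is quadratic in α and β, the central difference should agree with the chain-rule result up to floating-point rounding.

```python
def rss(alpha, beta, xs, ys):
    """Residual sum of squares for y = alpha + beta * x."""
    return sum((y - (alpha + beta * x)) ** 2 for x, y in zip(xs, ys))


def rss_gradient(alpha, beta, xs, ys):
    """Analytic partial derivatives of the RSS, as derived with the chain rule."""
    d_alpha = sum(2 * (y - (alpha + beta * x)) * (-1) for x, y in zip(xs, ys))
    d_beta = sum(2 * (y - (alpha + beta * x)) * (-x) for x, y in zip(xs, ys))
    return d_alpha, d_beta


def numerical_gradient(alpha, beta, xs, ys, h=1e-6):
    """Central finite-difference approximation, used only as a cross-check."""
    d_alpha = (rss(alpha + h, beta, xs, ys) - rss(alpha - h, beta, xs, ys)) / (2 * h)
    d_beta = (rss(alpha, beta + h, xs, ys) - rss(alpha, beta - h, xs, ys)) / (2 * h)
    return d_alpha, d_beta


heights = [1.6, 1.7, 1.75, 1.8, 1.85]
weights = [50, 53, 56, 60, 65]

# An arbitrary trial point; the check works at any (alpha, beta).
analytic = rss_gradient(0.0, 30.0, heights, weights)
numeric = numerical_gradient(0.0, 30.0, heights, weights)
print("analytic:", analytic)
print("numeric: ", numeric)
```

If the two printed pairs disagreed noticeably, that would point to a mistake in the derivative formulas.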

Step 2: Set the partial derivatives equal to zero

By setting these partial derivatives equal to zero and solving the resulting system of equations for α and β, we find the values that minimize the RSS and give the best fit for the data in the least squares linear regression model. Setting each derivative to zero and dividing both sides by the constant factor 2 gives:

\sum (y_i - (\alpha + \beta x_i))(-1) = 0

\sum (y_i - (\alpha + \beta x_i))(-x_i) = 0

Step 3: Simplify and rearrange the equations

Now, we can simplify these equations by multiplying both sides by −1 and distributing the summation over each term:

\sum y_i - \sum \alpha - \sum \beta x_i = 0

\sum x_i y_i - \sum \alpha x_i - \sum \beta x_i^2 = 0

These simplified equations are easier to work with and help us find the optimal values of α and β more efficiently.

Step 4: Factor the constants α and β out of the sums

We can further simplify these equations by recognizing that α and β are constants that do not change from one data point to the next, and thus:

∑α = nα (since there are n data points)

∑αxᵢ = α∑xᵢ (because α is constant with respect to xᵢ)

∑βxᵢ = β∑xᵢ and ∑βxᵢ² = β∑xᵢ² (for the same reason)

Substituting these expressions into the simplified equations and moving ∑yᵢ and ∑xᵢyᵢ to the right-hand side, we get:

n \alpha + \beta \sum x_i = \sum y_i

\alpha \sum x_i + \beta \sum x_i^2 = \sum x_i y_i

Now we have a system of two linear equations with two unknowns, α and β. These equations are called normal equations. Solving this system of equations allows us to find the optimal values of α and β that minimize the RSS, resulting in the best-fit line for the least squares linear regression model.
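The same normal equations can also be written in matrix form as XᵀX [α, β]ᵀ = Xᵀy, where X is a design matrix with a column of ones and a column holding the xᵢ. Multiplying out XᵀX gives [[n, ∑xᵢ], [∑xᵢ, ∑xᵢ²]] and Xᵀy gives [∑yᵢ, ∑xᵢyᵢ], exactly the coefficients above. The sketch below assumes NumPy is installed and uses the height-weight data from the worked example; it solves the normal equations directly and, for comparison, calls `np.linalg.lstsq`, which avoids forming XᵀX and is usually the more numerically robust choice.

```python
import numpy as np

# Height (m) and weight (kg) data from the worked example.
x = np.array([1.6, 1.7, 1.75, 1.8, 1.85])
y = np.array([50.0, 53.0, 56.0, 60.0, 65.0])

# Design matrix: a column of ones (multiplies alpha) and a column of x (multiplies beta).
X = np.column_stack([np.ones_like(x), x])

# X.T @ X == [[n, sum x], [sum x, sum x^2]] and X.T @ y == [sum y, sum xy].
lhs = X.T @ X
rhs = X.T @ y
alpha, beta = np.linalg.solve(lhs, rhs)

# lstsq minimizes ||y - X @ params||^2 directly, without forming X.T @ X.
params, *_ = np.linalg.lstsq(X, y, rcond=None)

print(lhs)
print(rhs)
print("normal equations:", alpha, beta)
print("lstsq:           ", params)
```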

Step 5: Solve the system of linear equations for α and β

To find the optimal values of α and β that minimize the residual sum of squares (RSS), we need to solve this system of linear equations. Using either substitution or elimination, we can derive the following closed-form expressions for α and β:

\begin{align*}
\alpha &= \frac{(\sum y_i)(\sum x_i^2) - (\sum x_i)(\sum x_i y_i)}{n(\sum x_i^2) - (\sum x_i)^2} \\
\beta &= \frac{n(\sum x_i y_i) - (\sum x_i)(\sum y_i)}{n(\sum x_i^2) - (\sum x_i)^2}
\end{align*}

Where:

- α and β = the intercept and slope of the best-fit line, respectively.

- n = the number of data points in the dataset.

- ∑xᵢ, ∑yᵢ, and ∑xᵢyᵢ = the sums of the predictor variable values (x), response variable values (y), and their products (x * y), respectively.

- ∑xᵢ² = the sum of the squared predictor variable values.

Once you have calculated the optimal values of α and β using these equations, you can plug them into the linear regression equation, y = α + βx, to make predictions for new data points and assess the model’s accuracy.
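The closed-form expressions translate directly into code. This is a minimal pure-Python sketch; `fit_least_squares_line` and `predict` are hypothetical helper names, and the toy data are chosen to lie exactly on y = 1 + 2x so the result is easy to verify.

```python
def fit_least_squares_line(xs, ys):
    """Return (alpha, beta) from the closed-form solution of the normal equations."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    sum_x = sum(xs)
    sum_y = sum(ys)
    sum_x2 = sum(x * x for x in xs)
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    denom = n * sum_x2 - sum_x ** 2
    if denom == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    alpha = (sum_y * sum_x2 - sum_x * sum_xy) / denom
    beta = (n * sum_xy - sum_x * sum_y) / denom
    return alpha, beta


def predict(alpha, beta, x):
    """Evaluate the fitted line y = alpha + beta * x."""
    return alpha + beta * x


# Hypothetical toy data lying exactly on y = 1 + 2x.
alpha, beta = fit_least_squares_line([0, 1, 2, 3], [1, 3, 5, 7])
print(alpha, beta)               # 1.0 2.0
print(predict(alpha, beta, 10))  # 21.0
```

The guard on `denom` matters: n∑xᵢ² − (∑xᵢ)² is zero exactly when every xᵢ is the same, in which case no unique slope exists.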

Example of calculus for least squares linear regression

Let’s use the equations derived above to find the optimal values of α (intercept) and β (slope) for the least squares regression line, using a small example dataset of heights (in meters) and weights (in kilograms). Here’s the data:

| Height in m (xᵢ) | Weight in kg (yᵢ) |
|---|---|
| 1.6 | 50 |
| 1.7 | 53 |
| 1.75 | 56 |
| 1.8 | 60 |
| 1.85 | 65 |

1. Calculate the necessary sums using the values for (xᵢ) and (yᵢ) in the table above:

\sum x_i = 1.6 + 1.7 + 1.75 + 1.8 + 1.85 = 8.7

\sum y_i = 50 + 53 + 56 + 60 + 65 = 284

\sum x_i^2 = 1.6^2 + 1.7^2 + 1.75^2 + 1.8^2 + 1.85^2 = 15.175

\sum x_i y_i = 1.6 \cdot 50 + 1.7 \cdot 53 + 1.75 \cdot 56 + 1.8 \cdot 60 + 1.85 \cdot 65 = 496.35

n (number of data points) = 5
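Recomputing the sums by machine is a cheap way to catch arithmetic slips in a hand calculation. A short sketch in plain Python:

```python
heights = [1.6, 1.7, 1.75, 1.8, 1.85]  # x_i, in meters
weights = [50, 53, 56, 60, 65]         # y_i, in kilograms

n = len(heights)
sum_x = sum(heights)
sum_y = sum(weights)
sum_x2 = sum(h ** 2 for h in heights)
sum_xy = sum(h * w for h, w in zip(heights, weights))

# round() hides floating-point noise such as 8.700000000000001
print(n, round(sum_x, 4), sum_y, round(sum_x2, 4), round(sum_xy, 4))
# prints: 5 8.7 284 15.175 496.35
```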

2. Apply the formulas for α and β:

\alpha = \frac{(284)(15.175) - (8.7)(496.35)}{(5)(15.175) - (8.7)^2} = \frac{-8.545}{0.185} \approx -46.189

\beta = \frac{(5)(496.35) - (8.7)(284)}{(5)(15.175) - (8.7)^2} = \frac{10.95}{0.185} \approx 59.189

So, the least squares regression line for the given height-weight data is approximately:

\text{Weight} = -46.189 + 59.189 \cdot \text{Height}
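For this dataset the denominator n∑xᵢ² − (∑xᵢ)² is the small difference between two nearly equal numbers (about 75.875 and 75.69), so rounding the sums before dividing can shift α and β substantially. A common remedy is the algebraically equivalent centered form β = ∑(xᵢ − x̄)(yᵢ − ȳ) / ∑(xᵢ − x̄)² and α = ȳ − βx̄, which subtracts the means before summing. The sketch below computes both versions side by side so you can compare them.

```python
heights = [1.6, 1.7, 1.75, 1.8, 1.85]
weights = [50, 53, 56, 60, 65]
n = len(heights)

# Raw-sum formulas, exactly as written in the derivation.
sum_x, sum_y = sum(heights), sum(weights)
sum_x2 = sum(h * h for h in heights)
sum_xy = sum(h * w for h, w in zip(heights, weights))
denom = n * sum_x2 - sum_x ** 2
alpha_raw = (sum_y * sum_x2 - sum_x * sum_xy) / denom
beta_raw = (n * sum_xy - sum_x * sum_y) / denom

# Centered formulas: algebraically identical, but avoid subtracting large sums.
x_bar, y_bar = sum_x / n, sum_y / n
s_xx = sum((h - x_bar) ** 2 for h in heights)
s_xy = sum((h - x_bar) * (w - y_bar) for h, w in zip(heights, weights))
beta = s_xy / s_xx
alpha = y_bar - beta * x_bar

print(f"raw:      alpha={alpha_raw:.3f}, beta={beta_raw:.3f}")
print(f"centered: alpha={alpha:.3f}, beta={beta:.3f}")
# both lines show alpha=-46.189, beta=59.189
```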

3. Make predictions using the model:

Since we have already calculated the optimal values of α (intercept) and β (slope) for the least squares regression line using the given height-weight dataset, let’s explore how to use this model to make predictions and assess its accuracy.

Let’s try to predict the weight for a height that is not in our dataset. For example, to predict the weight of someone who is 1.9 meters tall, we plug the height value into the equation above:

\text{Predicted Weight} = -46.189 + 59.189 \cdot 1.9 \approx 66.270

So, the predicted weight for a person with a height of 1.9 meters is approximately 66.27 kg. Keep in mind that 1.9 m lies outside the range of heights used to fit the line (1.6 to 1.85 m), so this prediction is an extrapolation and should be treated with caution.

4. Assess the Model’s Accuracy:

To assess the accuracy of the least squares regression line model, you can calculate the residual sum of squares (RSS), which is the sum of the squared differences between the actual weights (yᵢ) and the predicted weights (ŷᵢ) for each data point:

\text{RSS} = \sum (y_i - \hat{y}_i)^2

For our height-weight dataset, calculate the predicted weights (ŷᵢ) for each height value (xᵢ) using the regression line equation (the values below use the unrounded α and β):

| Height in m (xᵢ) | Actual Weight in kg (yᵢ) | Predicted Weight in kg (ŷᵢ) |
|---|---|---|
| 1.6 | 50 | 48.5135 |
| 1.7 | 53 | 54.4324 |
| 1.75 | 56 | 57.3919 |
| 1.8 | 60 | 60.3514 |
| 1.85 | 65 | 63.3108 |

Now, let’s calculate the RSS:

\text{RSS} = (50 - 48.5135)^2 + (53 - 54.4324)^2 + (56 - 57.3919)^2 + (60 - 60.3514)^2 + (65 - 63.3108)^2 \approx 9.176
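The predicted values and the RSS can be reproduced with a few lines of Python. This sketch fits the line with the centered least squares formulas and prints each height, its actual weight, and the prediction. As an extra sanity check, the residuals of a least squares line with an intercept always sum to zero, up to floating-point rounding.

```python
heights = [1.6, 1.7, 1.75, 1.8, 1.85]  # meters
weights = [50, 53, 56, 60, 65]         # kilograms
n = len(heights)

# Fit the line with the centered least squares formulas.
x_bar = sum(heights) / n
y_bar = sum(weights) / n
s_xx = sum((x - x_bar) ** 2 for x in heights)
s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(heights, weights))
beta = s_xy / s_xx
alpha = y_bar - beta * x_bar

predicted = [alpha + beta * x for x in heights]
residuals = [y - y_hat for y, y_hat in zip(weights, predicted)]
rss = sum(r ** 2 for r in residuals)

for x, y, y_hat in zip(heights, weights, predicted):
    print(f"{x:<5} {y:<3} {y_hat:.4f}")
print(f"RSS = {rss:.3f}")                                   # RSS = 9.176
print("residuals sum to ~0:", abs(sum(residuals)) < 1e-9)  # True
```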

The lower the RSS value, the better the model fits the data. However, comparing RSS values across different datasets or models can be misleading because the scale of the values may differ. To obtain a more interpretable measure of the model’s accuracy, you can calculate the coefficient of determination (R²):

R^2 = 1 - \frac{\text{RSS}}{\text{TSS}}

Where:

TSS = the total sum of squares, which is the sum of the squared differences between the actual weights (yᵢ) and the mean weight (ȳ):

\text{TSS} = \sum (y_i - \bar{y})^2

First, calculate the mean weight (ȳ):

\bar{y} = \frac{(50 + 53 + 56 + 60 + 65)}{5} = 56.8

Next, calculate the TSS:

\text{TSS} = (50 - 56.8)^2 + (53 - 56.8)^2 + (56 - 56.8)^2 + (60 - 56.8)^2 + (65 - 56.8)^2 = 46.24 + 14.44 + 0.64 + 10.24 + 67.24 = 138.8

Finally, calculate the R²:

R^2 = 1 - \frac{9.176}{138.8} \approx 0.934

An R² of about 0.934 means that the regression line explains roughly 93% of the variation in weight in this sample, so the line fits these five data points well. For a least squares line that includes an intercept, R² calculated on the same data used to fit the line always falls between 0 and 1, with higher values indicating a better fit.

A negative R² is possible only when a model fits the data worse than a horizontal line through the mean of the response variable (weight, in this case), for example when the model is evaluated on new data or fitted without an intercept. If a by-hand least squares calculation on the fitting data produces a negative R², the most likely cause is an arithmetic error.

Limitations and Assumptions of Least Squares Regression Line

While the least squares regression method offers valuable insights, it comes with certain limitations and assumptions that we need to consider:

Linearity: The method assumes a linear relationship between the independent and dependent variables. If this assumption is not met, the model may not provide accurate predictions. Suppose we want to predict the growth of a plant based on the amount of sunlight it receives. If the relationship between sunlight and growth is not linear (e.g., it follows an exponential growth pattern), then a least squares regression line would not provide accurate predictions.

Independence: The observations should be independent of each other. If the observations are correlated, the model’s standard errors and significance tests can be misleading. For example, in a study examining the effect of a new medication on blood pressure, if the participants are all from the same family, their blood pressure levels may be correlated due to shared genetics. This violates the independence assumption and can make the results look more certain than they really are, as well as harder to generalize to the wider population.

Homoscedasticity: The method assumes that the variance of the error terms is constant across all levels of the independent variable. When this assumption is not met, the estimated slope and intercept are not biased by it, but the usual standard errors, confidence intervals, and p-values can be unreliable. Let’s examine the relationship between income and education level. The variance of incomes may be much larger for individuals with higher education levels than for those with lower education levels. In this case, the assumption of homoscedasticity is not met, and inferences drawn from the least squares regression line may be misleading.

Normality: The error terms should be normally distributed. If this assumption is violated, it can affect the validity of the model’s statistical inferences. Say we’re examining the relationship between age and lung capacity. If the distribution of the error terms is heavily skewed (e.g., due to a small number of extremely high or low lung capacity values), the normality assumption is violated. This can affect the validity of any conclusions we draw from the model.

Evaluating the Performance of a Least Squares Regression Line Model

To assess the performance of a least squares regression model, several statistical measures can be employed:

Coefficient of Determination (R-squared): This measure indicates the proportion of the variance in the dependent variable that is explained by the model. An R-squared value close to 1 indicates a strong linear relationship between the variables, while a value close to 0 indicates a weak one.

Standard Error of the Estimate: This metric estimates the typical size of the deviations of the observed values from the predicted values; for a simple linear regression it is √(RSS / (n − 2)). A smaller standard error indicates a better model fit.

t-statistic and p-value: The t-statistic and p-value help determine the statistical significance of the model’s parameters. A low p-value (typically below 0.05) suggests that a parameter differs from zero, which points to a relationship between the variables.

Residual Analysis: Examining the residuals (the differences between observed and predicted values) can reveal potential issues with the model, such as non-linearity, heteroscedasticity, or outliers.

Enhancing Least Squares Regression Models with Advanced Techniques

If you’re dealing with more complicated data or relationships, you can level up your regression game with some advanced techniques to make your model even better at predicting stuff:

- Multiple Regression: This method takes basic linear regression up a notch by including more than one independent variable, giving you a fuller picture of how everything’s connected.

- Polynomial Regression: Got a curvy relationship instead of a straight line? No worries! Polynomial regression lets you model non-linear relationships by adding higher-order terms to the mix.

- Ridge and Lasso Regression: If you’re running into problems like multicollinearity or overfitting, these regularization techniques can save the day by making your model more stable and accurate.

- Generalized Linear Models (GLMs): GLMs are the superheroes of regression, letting you handle non-linear relationships and funky distributions for your dependent variable so that you can use regression on all kinds of data.
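As a taste of polynomial regression, the sketch below (assuming NumPy is installed) uses `np.polyfit` to fit both a straight line and a quadratic to the height-weight data. Polynomial regression is still linear least squares, because the model is linear in its coefficients even though it is curved in x. The quadratic’s RSS can never be higher than the line’s on the same data, since the line is a special case of it, so a lower RSS alone is not evidence of a better model; this is where overfitting, and regularization techniques such as ridge and lasso, come in.

```python
import numpy as np

heights = np.array([1.6, 1.7, 1.75, 1.8, 1.85])
weights = np.array([50.0, 53.0, 56.0, 60.0, 65.0])

# np.polyfit returns coefficients from the highest power down.
beta, alpha = np.polyfit(heights, weights, deg=1)  # straight line
c2, c1, c0 = np.polyfit(heights, weights, deg=2)   # adds an x^2 term


def rss(coeffs):
    """Residual sum of squares for highest-power-first polynomial coefficients."""
    return float(np.sum((weights - np.polyval(coeffs, heights)) ** 2))


print(f"line:      alpha={alpha:.3f}, beta={beta:.3f}, RSS={rss([beta, alpha]):.3f}")
print(f"quadratic: RSS={rss([c2, c1, c0]):.3f}")
```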

There you have it! With these advanced techniques, you can tackle even the trickiest regression problems.

Practical Applications of Regression Line Least Squares

The least squares regression line pops up in all sorts of areas, and you’ll find it super useful in a bunch of fields, like:

- Economics: Economists love using regression to find connections between things like income and spending or to guess what’s coming up next based on past data.

- Finance: Financial gurus use regression to make educated guesses about stock prices, determine how risky a portfolio is, or see if an investment strategy is working out.

- Marketing: Marketing peeps turn to regression to see how well their ad campaigns boost sales or pinpoint what makes customers tick.

- Healthcare: Healthcare researchers dig into regression to find links between risk factors and health results or to check how well different treatments work.

- Environmental Science: Environmental scientists use regression to see how pollution affects air quality or to predict what climate change might do to all the plants and animals living in an ecosystem.

So, as you can see, the least squares regression line is a handy tool for all kinds of people in different fields!

Conclusion

So, there you have it! The least squares regression line is a super useful tool for understanding and predicting relationships between variables, and it’s got loads of applications across different fields.

Don’t forget that there are some assumptions and limitations to keep in mind, but with advanced techniques in your toolkit, you can take on more complex data and make even better predictions. Now you’re ready to rock the world of regression! 🚀